Release of DeepSeek-TNG R1T2 Chimera

July 3rd, 2025

Today, we release DeepSeek-TNG R1T2 Chimera.

This new Chimera is a Tri-Mind Assembly-of-Experts large language model with three parents, namely DeepSeek's R1-0528, R1 and V3-0324.

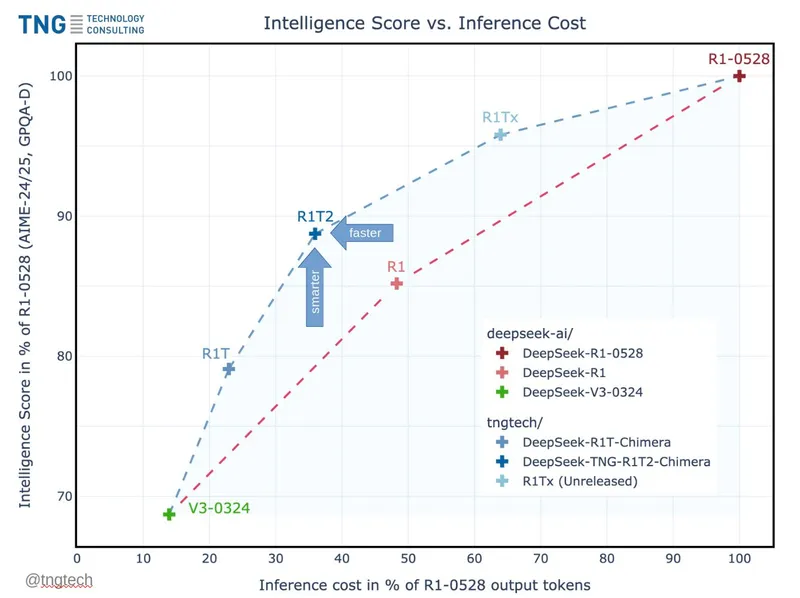

R1T2 operates at a sweet spot in intelligence vs. inference cost. It appears to be:

- about 20% faster than R1 and more than twice as fast as R1-0528,
- significantly more intelligent than R1 in benchmarks such as GPQA-Diamond and AIME-24/25, albeit not quite on R1-0528’s level,
- much more intelligent than our first R1T Chimera,
- `<think>`-token consistent, which is a major improvement.
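One practical consequence of consistent think tokens is that downstream code can separate the reasoning trace from the final answer with simple string handling. The following is a minimal sketch, not part of the release: it assumes the completion marks the end of its reasoning with a `</think>` tag in the style of DeepSeek R1, and that the opening `<think>` tag may or may not appear in the returned text depending on the chat template. The function name `split_reasoning` and the sample strings are hypothetical.

```python
def split_reasoning(text: str,
                    open_tag: str = "<think>",
                    close_tag: str = "</think>") -> tuple[str, str]:
    """Split a raw completion into (reasoning, answer).

    Assumption: reasoning ends at the first closing tag. If no closing
    tag is present, the whole text is treated as the answer.
    """
    head, sep, tail = text.partition(close_tag)
    if not sep:
        # No closing tag found: nothing to separate.
        return "", text.strip()
    reasoning = head.strip()
    # The opening tag may be missing if the chat template already emitted it.
    if reasoning.startswith(open_tag):
        reasoning = reasoning[len(open_tag):].strip()
    return reasoning, tail.strip()


# Hypothetical completion, for illustration only.
reasoning, answer = split_reasoning("<think>2+2=4</think>The answer is 4.")
print(reasoning)
print(answer)
```

With a model that is not think-token consistent, the fallback branch would fire unpredictably, which is why consistency matters for this kind of post-processing.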

We perceive it as generally well-behaved and a nice persona to talk to. The weights are on Hugging Face under the MIT licence.

Thanks to DeepSeek AI for giving their models to the world, to Chutes, OpenRouter and Alex Atallah for hosting R1T, and to the community for benchmarking, testing and using our Chimera models.